Meta, the company behind Facebook, Instagram, WhatsApp, and Threads, has announced that it will end its third-party fact-checking program in the U.S. The move marks a significant shift in how misinformation is handled on social media. Meta is replacing professional fact-checkers with a user-driven “Community Notes” system similar to the one used by X (formerly Twitter). The change has drawn both enthusiasm and concern from the fact-checking community and from others invested in the integrity of online information.

The End of Meta’s Fact-Checking Era

Meta’s fact-checking program, launched in 2016, was once praised as a pioneering effort against misinformation. It partnered with more than 90 fact-checking organizations worldwide and supported content verification in over 60 languages. Meta CEO Mark Zuckerberg, however, has cited “excessive censorship” and “political bias” as reasons for ending it. In a video explaining the decision, Zuckerberg stressed the company’s commitment to free speech and argued that Community Notes would democratize the process of adding context to posts, reducing reliance on professional fact-checkers.

The decision has left many partner organizations scrambling. Neil Brown, president of the Poynter Institute, which runs PolitiFact, pushed back, stressing that “facts are not censorship” and that the power to remove content always rested with Meta, not with fact-checkers (Poynter).

Alphabet’s Stance Against EU Fact-Checking Mandates

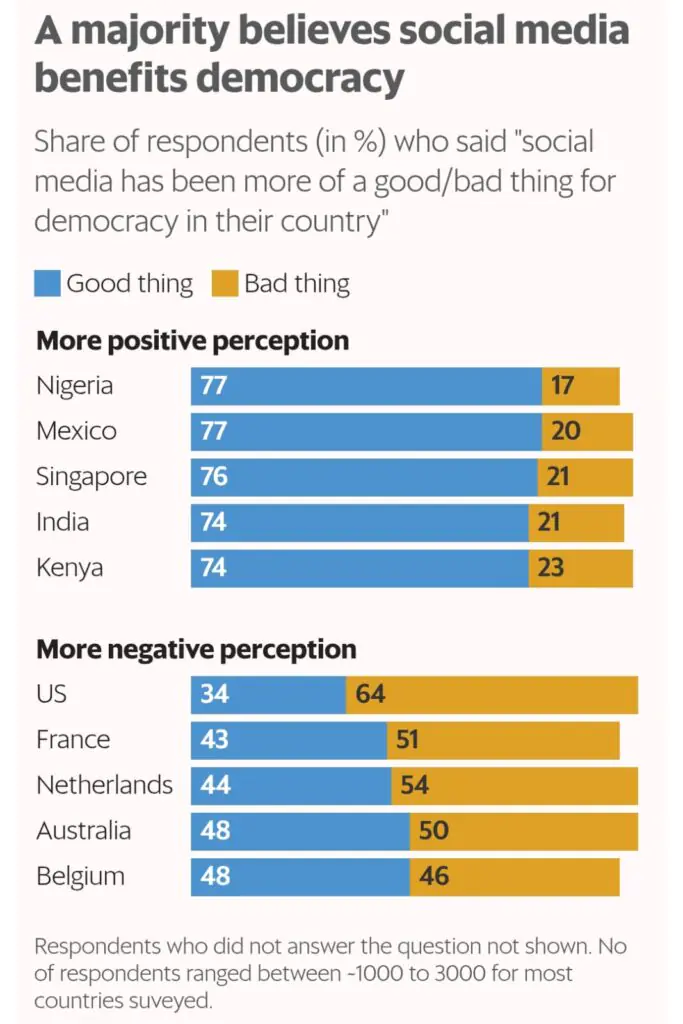

Alongside Meta’s move, Alphabet, the parent company of Google and YouTube, has declined to build fact-checking into Google Search and YouTube as called for under new EU rules. Kent Walker, Alphabet’s president of global affairs, said fact-checking was not appropriate for the way its services work. The refusal comes at a critical moment: the EU faces multiple elections in 2025, where misinformation could significantly affect democratic processes. A European Commission report found that 65% of Europeans are worried about misinformation on social platforms during elections (European Commission).

The Emergence of Community Notes

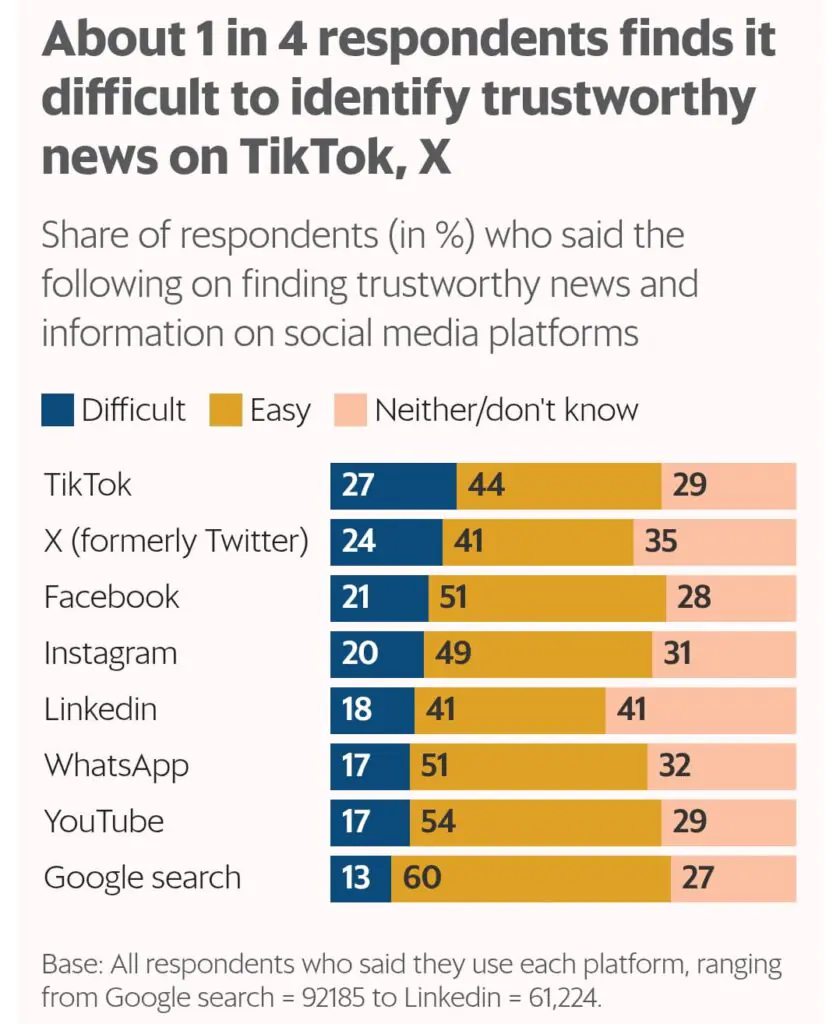

Meta’s adoption of Community Notes follows X’s lead. On X, users can anonymously write notes that flag misleading posts and add context to them. The system has shown some promise in curbing misinformation, but it has clear weaknesses. Research suggests that although it can reduce the visibility of false information, notes often appear slowly, so misinformation spreads widely before any correction is attached. There is also a risk of bias, since users may flag content because they disagree with it politically rather than because it is factually wrong. A 2024 University of Cambridge study found that only 40% of flagged posts on X received timely corrections, leaving much misinformation unaddressed (Cambridge University).

Global Misinformation Landscape

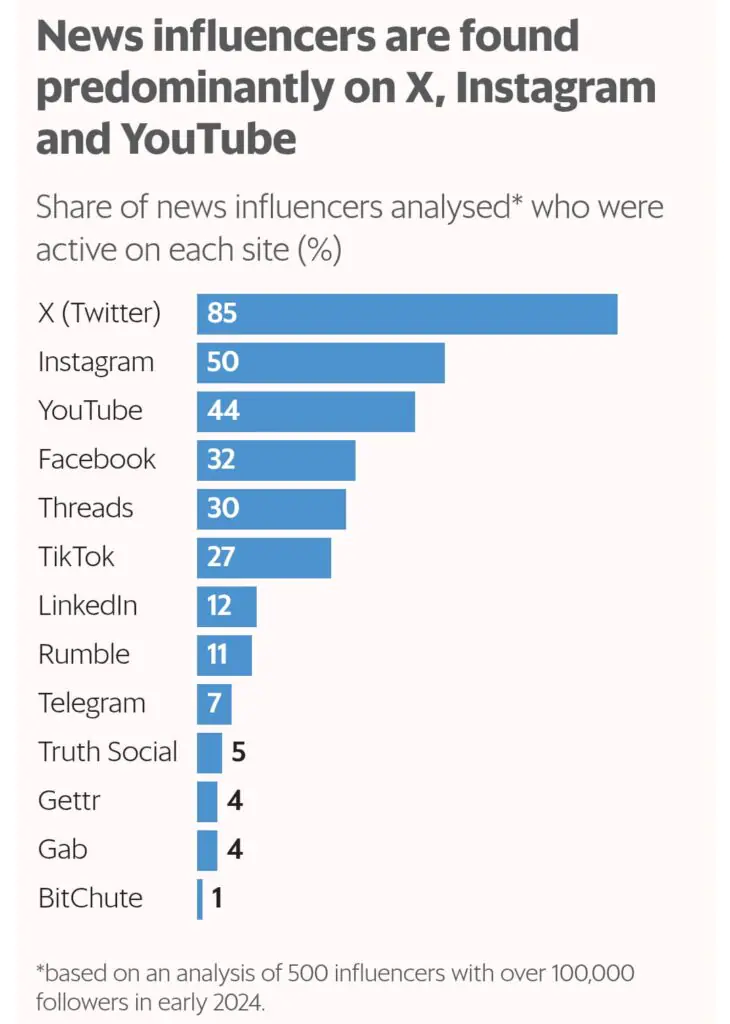

The retreat from centralized fact-checking coincides with a global rise in misinformation, which the World Economic Forum has identified as a significant threat to stability. Social media platforms, with their massive user bases, sit at the center of this problem, serving both as forums for democratic discourse and as channels for misinformation. A 2024 Reuters Institute report found growing concern among users about telling real news from fake news online, and notably low trust in X as a source of reliable news (Reuters Institute).

AI’s Role in the Fact-Checking Arena

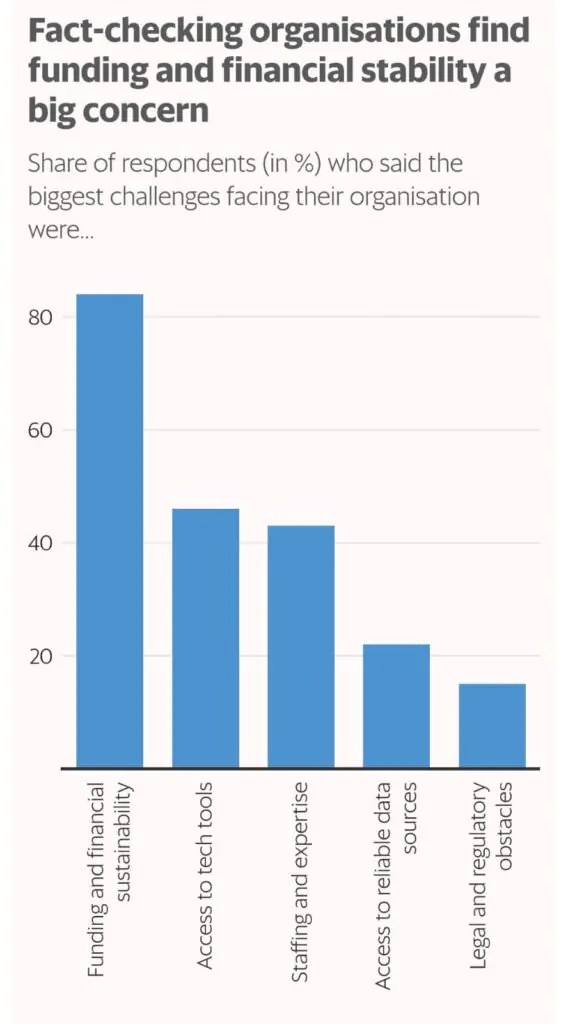

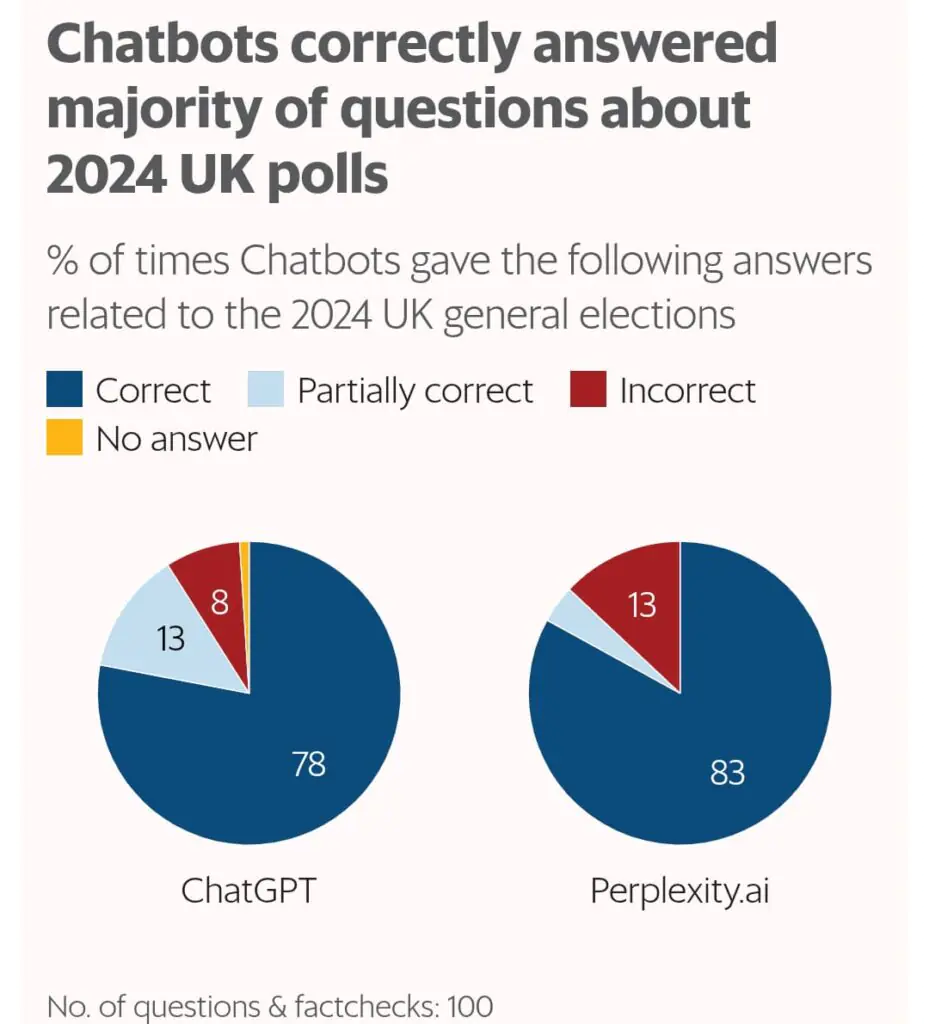

Artificial intelligence offers a new front in combating misinformation: according to the IFCN, 55% of fact-checking organizations use AI for preliminary research. During the 2024 UK general election, AI models such as ChatGPT-4.0 and Perplexity.ai were tested and achieved accuracy rates of 78% and 83%, respectively. Even so, AI must be integrated into fact-checking with care, because it can inherit and amplify biases or generate misleading information of its own (IFCN).

A Complex Balance

The shift to user-driven moderation cuts both ways. It empowers users and counters the potential biases of external fact-checkers, yet it also risks amplifying misinformation and eroding trust in online content. As Meta and Alphabet pursue these new strategies, the world is watching to see how the changes will affect democracy, public health, and social cohesion.

Key Insights

- Meta’s Community Notes Initiative: The shift to user annotations signifies a broader movement towards decentralized content moderation but questions remain about its effectiveness against misinformation.

- Alphabet’s Regulatory Pushback: Alphabet’s defiance of EU fact-checking mandates underscores a broader tech-industry resistance to regulatory oversight, potentially affecting information quality during key democratic moments.

- The Misinformation Crisis: The global scale of misinformation continues to grow, with social media platforms at the epicenter, demanding innovative solutions beyond traditional fact-checking.

- AI in Fact-Checking: AI offers promising tools for fact-checking, but human judgment remains essential to correct for biases and ensure ethical use.

- Future of Digital Trust: With less centralized control, the burden shifts to users to separate fact from falsehood, which could lead either to more engaged, informed communities or to deeper societal division.

As we move forward, the trajectory of online information integrity will depend on how these platforms balance user freedom with the need for accuracy and trust.

www.howindialives.com is a database and search engine for public data.